- Category

- Deep Learning

Federated Learning in a Nutshell

A quick overview of federated learning, exploring its core concepts, advantages, challenges, pseudocode, and use cases.

What is federated learning?

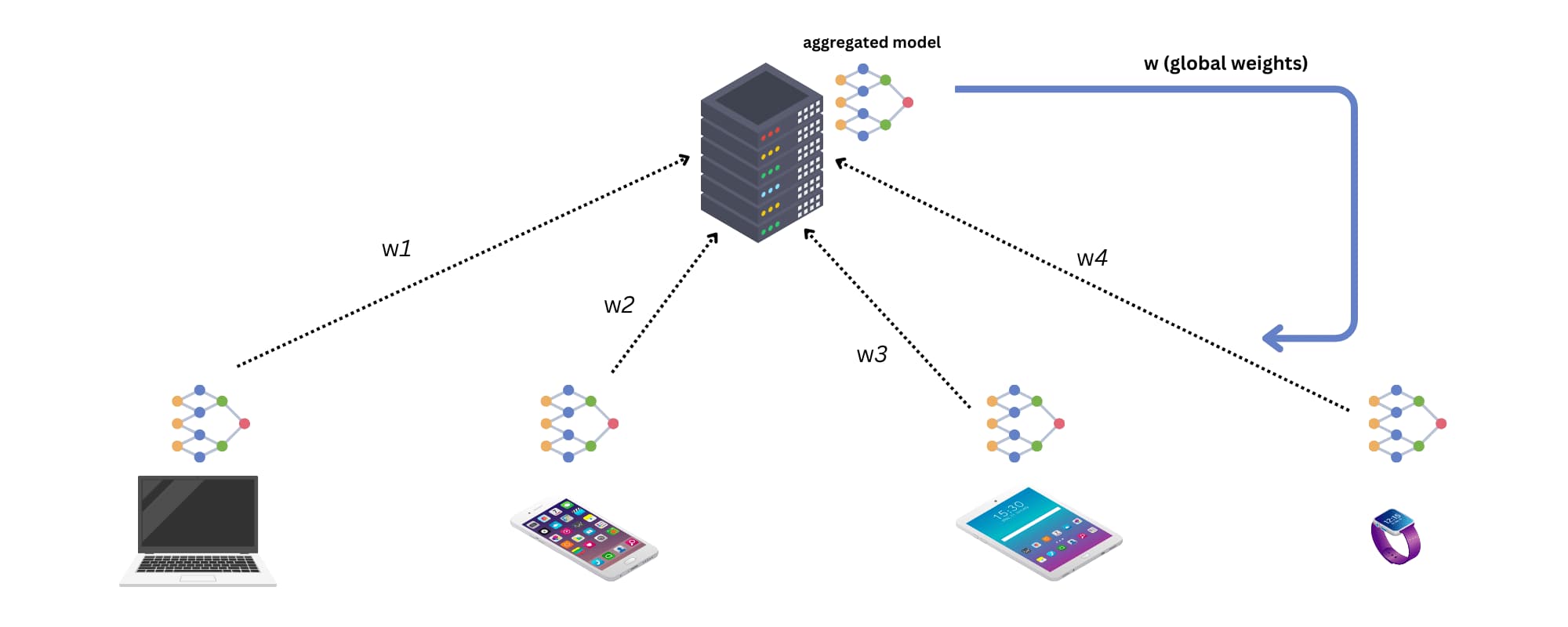

Federated learning is a machine learning approach in which a model is trained across multiple decentralized devices or servers that hold local data samples, without exchanging those samples. This lets a model learn from valuable, possibly sensitive, decentralized data without moving the data to a central server.

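Here are some advantages of federated learning:

- Privacy: Raw data never leaves the device or server that holds it, so it is not exposed to the central server or to third parties. Only model updates are shared, although these can still leak some information about the training data.

- Cost: The heavy training computation runs on the clients, so the central server mainly aggregates models and does not need to be especially powerful.

- Fault tolerance: If a client device or server fails or drops out, the training process can continue with the remaining clients.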

Here are some challenges of federated learning:

- Communication cost: In every round, each participating client uploads its model weights to the server, and the server sends the aggregated model back so that the clients can continue from it. This traffic flows between the clients and the server, often over slow or metered connections. It may be negligible for small neural networks, but tasks such as image classification often call for models with millions of parameters to reach good accuracy. For example, a model with 10 million 32-bit parameters is about 40 MB, so each client uploads and downloads roughly 80 MB per round. The total communication cost grows with the model size, the number of participating clients, and the number of rounds (how many times you update the model).

- Non-IID data: In federated learning, each client's data reflects its own users and environment, so the data distribution can differ from client to client. Such data is called non-IID (IID: independent and identically distributed). For example, if you are working on image classification and your clients are in different countries, images of cars collected in Turkey may look quite different from those collected in Germany. When local distributions diverge like this, the local models drift in different directions, and the aggregated model may not generalize well.

- Unbalanced data: The amount of data can vary widely among clients. Some users of your app use it much more heavily than others, so some clients hold far more local training data than others.
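To make the last two challenges concrete, here is a minimal Python sketch that simulates them on a toy dataset of 1,000 labels. `label_skew_split` and `quantity_skew_split` are hypothetical helpers written for this illustration, not library functions. Sorting samples by label and dealing out a few shards per client is one common way to simulate non-IID clients in experiments; it is an assumption here, not the only option.

```python
import random
from collections import Counter


def label_skew_split(labels, num_clients, shards_per_client, seed=0):
    """Non-IID split (hypothetical helper): sort sample indices by label, cut them
    into equal shards, and deal each client a few random shards, so each client
    sees only a few classes. Leftover samples that do not fill a shard are dropped."""
    rng = random.Random(seed)
    order = sorted(range(len(labels)), key=lambda i: labels[i])
    num_shards = num_clients * shards_per_client
    shard_size = len(order) // num_shards
    shards = [order[s * shard_size:(s + 1) * shard_size] for s in range(num_shards)]
    rng.shuffle(shards)
    # Concatenate the shards assigned to each client.
    return [sum(shards[c * shards_per_client:(c + 1) * shards_per_client], [])
            for c in range(num_clients)]


def quantity_skew_split(num_samples, sizes, seed=0):
    """Unbalanced split (hypothetical helper): client k receives sizes[k] randomly
    chosen samples, and the sizes can differ wildly between clients."""
    if sum(sizes) > num_samples:
        raise ValueError("not enough samples for the requested sizes")
    rng = random.Random(seed)
    order = list(range(num_samples))
    rng.shuffle(order)
    clients, start = [], 0
    for size in sizes:
        clients.append(order[start:start + size])
        start += size
    return clients


if __name__ == "__main__":
    # Toy dataset: 1,000 samples spread evenly over 10 classes.
    labels = [i % 10 for i in range(1000)]
    for k, idx in enumerate(label_skew_split(labels, num_clients=5, shards_per_client=2)):
        print(f"client {k}: classes {sorted(Counter(labels[i] for i in idx))}")
    unbalanced = quantity_skew_split(1000, [600, 250, 100, 40, 10])
    print("samples per client:", [len(c) for c in unbalanced])
```

With 10 classes, 5 clients, and 2 shards per client, each client ends up with samples from exactly two classes, while the quantity split gives the clients anywhere from 10 to 600 samples.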

Federated learning pseudocode

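Here is the pseudocode for federated learning:

```
w ← initial global model
for each round t = 1, 2, ... do
    for each client k = 1, 2, ..., K do
        c ← client k
        w_k ← c.localTrain(w)          // client trains a copy of the global model on its local data
    end for
    w ← aggregate(w_1, ..., w_K)       // server combines the client models
end for
```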

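In each round, the server sends the current global model to the clients. Each client trains it on its own local data and sends its updated weights back to the server, which aggregates them into a new global model and sends that model out at the start of the next round. The loop runs the clients one after another for readability; in practice they train in parallel, and often only a subset of clients participates in each round. This process continues until the model converges or a fixed number of rounds is reached.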
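The loop can be turned into a small runnable Python simulation. This is a minimal sketch under toy assumptions: the "model" is just two numbers fitting y ≈ a·x + b, `local_train` is a hypothetical stand-in for `c.localTrain` that runs a few epochs of plain SGD, all clients train sequentially in one process, and `aggregate` is a plain unweighted average. No networking or federated learning library is involved.

```python
import random


def local_train(w, data, lr=0.05, epochs=5):
    """Hypothetical client update: a few epochs of plain SGD on the squared
    error of the toy model y ≈ a * x + b. Returns a new [a, b]; w is not modified."""
    a, b = w
    for _ in range(epochs):
        for x, y in data:
            err = (a * x + b) - y  # prediction error on one local sample
            a -= lr * err * x      # gradient step for the slope
            b -= lr * err          # gradient step for the intercept
    return [a, b]


def aggregate(client_weights):
    """Unweighted federated averaging: element-wise mean across clients."""
    k = len(client_weights)
    return [sum(params) / k for params in zip(*client_weights)]


def federated_training(client_datasets, rounds=20):
    w = [0.0, 0.0]  # global model [a, b] held by the server
    for _ in range(rounds):
        # Server broadcasts w; each client trains its own copy on its local data.
        client_weights = [local_train(w, data) for data in client_datasets]
        # Server averages the returned weights into the next global model.
        w = aggregate(client_weights)
    return w


def make_client_data(rng, n):
    """Toy private dataset: n noisy samples of y = 2x + 1."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, 2.0 * x + 1.0 + rng.gauss(0.0, 0.1)) for x in xs]


if __name__ == "__main__":
    rng = random.Random(0)
    clients = [make_client_data(rng, 50) for _ in range(3)]
    a, b = federated_training(clients)
    print(f"a = {a:.2f}, b = {b:.2f}")  # should land close to a = 2, b = 1
```

Because every client's data comes from the same line here (IID data), the averaged model recovers roughly a = 2 and b = 1 without any client sharing its samples.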

Federated averaging

Federated averaging is a simple and popular way to aggregate the models. In its simplest form, the server takes the element-wise average of the client models' parameters. Here is the pseudocode for federated averaging:

```
function aggregate(w_1, ..., w_K)
    return mean(w_1, ..., w_K)         // element-wise mean of the parameters
end function
```
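For a neural network, "mean" means averaging parameter by parameter, layer by layer. A minimal Python sketch, assuming (hypothetically) that each client model is a dict mapping a layer name to a flat list of floats and that all clients share the same architecture:

```python
def federated_average(client_models):
    """Unweighted federated averaging: element-wise mean of every parameter.

    Each model is assumed to be a dict {layer name: flat list of floats};
    all clients must share the same layer names and shapes."""
    num_clients = len(client_models)
    return {
        name: [sum(m[name][i] for m in client_models) / num_clients
               for i in range(len(values))]
        for name, values in client_models[0].items()
    }


if __name__ == "__main__":
    a = {"dense.weight": [1.0, 2.0], "dense.bias": [0.0]}
    b = {"dense.weight": [3.0, 4.0], "dense.bias": [1.0]}
    print(federated_average([a, b]))
    # {'dense.weight': [2.0, 3.0], 'dense.bias': [0.5]}
```

Real frameworks store parameters as tensors rather than lists, but the averaging rule is the same.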

You can also use weighted federated averaging, in which the server takes a weighted average of the client models, so some clients influence the global model more than others. Here is the pseudocode for weighted federated averaging:

```
function aggregate(w_1, ..., w_K)
    return weightedMean(w_1, ..., w_K; p_1, ..., p_K)   // p_k = weight of client k
end function
```
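Written as a formula, with p_k the weight of client k and w_k its model, the new global model is w = Σ_k (p_k / Σ_j p_j) · w_k. Here is a minimal Python sketch of that rule; for simplicity it assumes each model is a flat list of floats, and `weighted_federated_average` is a hypothetical name for illustration.

```python
def weighted_federated_average(client_models, weights):
    """Weighted federated averaging: sum_k (weights[k] / sum(weights)) * model_k.

    Models are flat lists of floats here (an illustrative simplification);
    weights must be non-negative and must not all be zero."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    coeffs = [wk / total for wk in weights]  # normalized so they sum to 1
    num_params = len(client_models[0])
    return [sum(c * m[i] for c, m in zip(coeffs, client_models))
            for i in range(num_params)]


if __name__ == "__main__":
    models = [[1.0, 0.0], [3.0, 2.0]]
    # The second client holds three times as much data as the first.
    print(weighted_federated_average(models, [100, 300]))  # [2.5, 1.5]
```

Normalizing inside the function means the weights can be given in any unit (sample counts, scores), and equal weights reduce it to the plain average.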

You can set the client weights according to, for example, their:

- Number of samples: Clients with more training samples get more weight. This is the weighting used by the original federated averaging algorithm.

- Number of iterations: Clients that ran more local training iterations get more weight.

- Accuracy: Clients whose local models reach higher accuracy get more weight.

- Loss: Clients with lower loss get more weight.

- Distance: Clients closer to the server (for example, with lower network latency) get more weight.

- F1 score: Clients with a higher F1 score get more weight.
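A few of these options can be sketched as functions that turn per-client statistics into normalized weights (summing to 1) for a weighted average. The function names and the inverse-loss formula are illustrative assumptions, not a standard recipe. Note that accuracy, loss, and F1 score are usually measured on each client's own data, so with non-IID data they may not be directly comparable across clients.

```python
def normalize(scores):
    """Scale non-negative scores so they sum to 1."""
    total = sum(scores)
    if total <= 0:
        raise ValueError("scores must sum to a positive number")
    return [s / total for s in scores]


def weights_from_samples(num_samples):
    """More local samples -> more weight (the n_k / n rule of federated averaging)."""
    return normalize(num_samples)


def weights_from_metric(scores):
    """Higher accuracy or F1 score -> proportionally more weight."""
    return normalize(scores)


def weights_from_loss(losses, eps=1e-8):
    """Lower loss -> more weight; inverse loss is one arbitrary choice.
    eps avoids division by zero for a client reporting zero loss."""
    return normalize([1.0 / (loss + eps) for loss in losses])


if __name__ == "__main__":
    print(weights_from_samples([100, 300]))  # [0.25, 0.75]
    print(weights_from_metric([0.9, 0.6]))
    print(weights_from_loss([0.5, 1.0]))     # roughly [0.667, 0.333]
```

Any of these weight vectors can be passed to a weighted average; combining several criteria (for example, multiplying sample-count and accuracy weights before normalizing) is also possible but is a design choice you would need to validate.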

Some use cases for federated learning

Healthcare

Patient data is sensitive, and in most cases laws and regulations prevent it from being moved to a central server. Federated learning lets several health institutions train a shared model, for example one that predicts diseases, without moving patient data to a central server. Each institution then benefits from a model that has learned from more patients than it has on its own, which can improve accuracy.

IoT: Internet of Things

IoT devices can produce a lot of data. Federated learning can train a global model on the data from IoT devices without moving that data to a central server. Because there are so many devices and they produce so much data, collecting everything and training on a central server may not be cost-efficient, so federated learning can be the more cost-efficient choice for many IoT applications.

Smartphones

Google uses federated learning to improve Gboard, its keyboard app for Android phones. The keyboard's models, such as next-word prediction, can learn from the data on users' phones without that data being moved to a central server.

and more...

Accuracy of federated learning models

The accuracy of a machine learning or deep learning model depends on many factors, such as the neural network architecture, the amount of data, the data splitting technique, and the optimizer. In general, though, federated learning models tend to reach lower accuracy than models trained on a central server, partly because of the non-IID and unbalanced data described above. You can often narrow the gap by using a similar neural network architecture, data splitting technique, and optimizer, but you may need to train for more rounds to reach similar accuracy. More rounds increase the communication cost and training time, and training for too many rounds can also lead to overfitting.

Further reading

This article is just an introduction to federated learning. I drew on the following resources while writing it, and you should check them out if you want to learn more about federated learning:

AbdulRahman, S., Tout, H., Ould-Slimane, H., Mourad, A., Talhi, C., & Guizani, M. (2020). A survey on federated learning: The journey from centralized to distributed on-site learning and beyond. IEEE Internet of Things Journal, 8(7), 5476-5497.

Li, T., Sahu, A. K., Talwalkar, A., & Smith, V. (2020). Federated learning: Challenges, methods, and future directions. IEEE Signal Processing Magazine, 37(3), 50-60.